Visualizing features, receptive fields, and classes in neural networks from "scratch" with Tensorflow 2. Part 4: DeepDream and style transfer

Posted on Tue 19 May 2020 in Neural networks

Summary

In the last section I talked about reconstructing images from their layer activations at different levels of a deep neural network. This time, we're going to modify images: first by amplifying the activations of filters and classes in the network (DeepDream), and then by transferring the style of one image onto another. All the figures here are generated from a single self-contained Jupyter notebook.

This is the fourth and final part of the series.

DeepDream: modifying images with neural network filters and classes

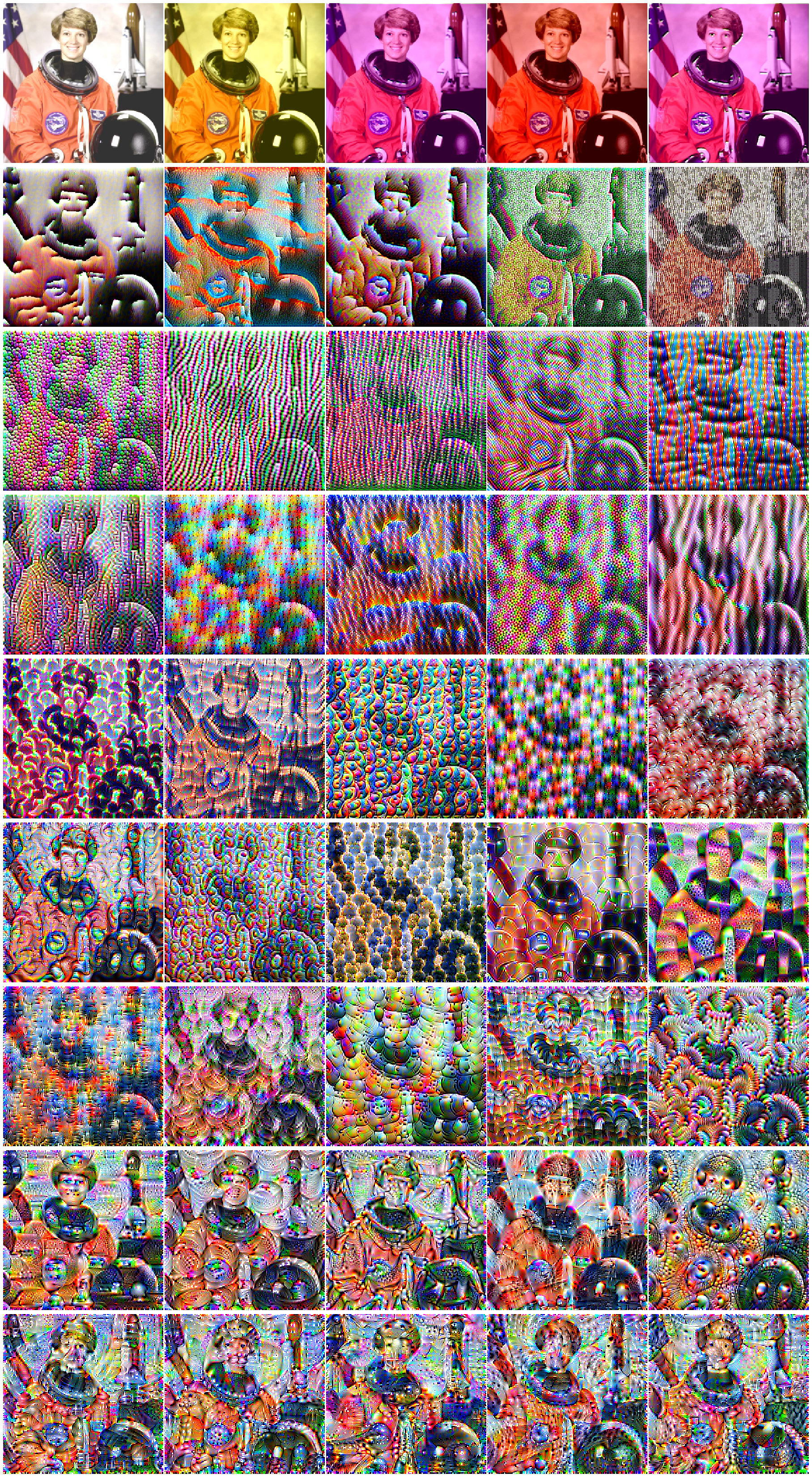

In the second section, we synthesized images by starting from an image of noise and modifying it until it maximally activated a neuron or filter in a deep neural network. Here, we want to use those filters to modify existing images. To do that, instead of starting from noise, we start from an image. Images partway between the starting image and the fully optimized one can have features that look styled by the CNN's filters:

Stylizations of an image of Astronaut Eileen Collins by modifying the activations of neurons at different levels of the VGG16 CNN architecture.

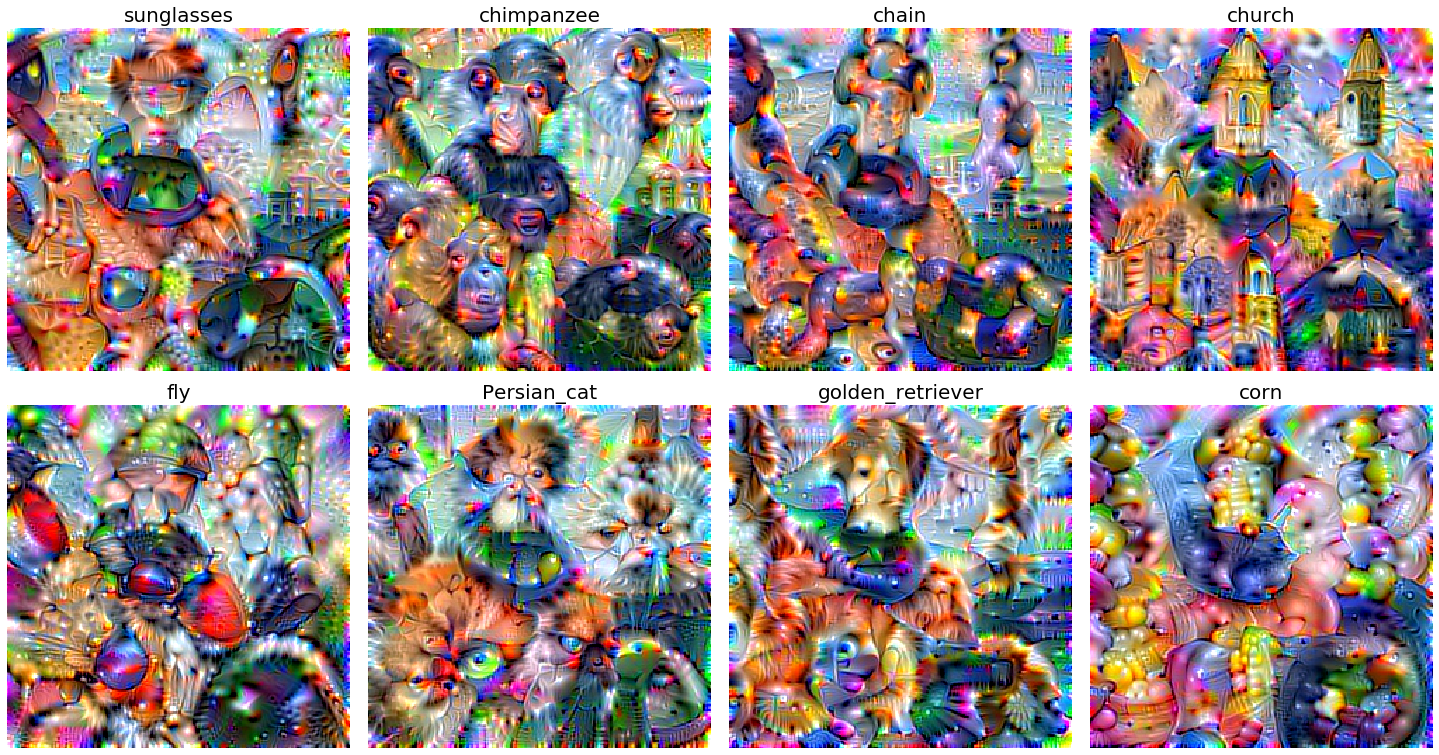
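The procedure described above can be sketched as gradient ascent on a filter's activation, starting from an image rather than from noise. This is a minimal sketch, not the notebook's code: `dream_step`, `filter_index`, `step_size`, and the tiny untrained `feature_model` are hypothetical names and stand-ins, and the gradient normalization is an assumption. With VGG16 one would instead pass a model that outputs an intermediate layer of `tf.keras.applications.VGG16`.

```python
import tensorflow as tf

def dream_step(feature_model, image, filter_index, step_size=0.01):
    """One gradient-ascent step on the mean activation of a single filter."""
    with tf.GradientTape() as tape:
        tape.watch(image)  # image is a plain tensor, so track it explicitly
        activations = feature_model(image)  # shape (1, H, W, C)
        loss = tf.reduce_mean(activations[..., filter_index])
    grads = tape.gradient(loss, image)
    # Assumption: normalize the gradient so step sizes are comparable across filters.
    grads = grads / (tf.math.reduce_std(grads) + 1e-8)
    return image + step_size * grads, loss

# Hypothetical stand-in network so the sketch runs without downloading weights.
tf.random.set_seed(0)
feature_model = tf.keras.Sequential([
    tf.keras.Input(shape=(64, 64, 3)),
    tf.keras.layers.Conv2D(8, 3, activation="relu", padding="same"),
    tf.keras.layers.Conv2D(16, 3, activation="relu", padding="same"),
])

# Placeholder for a real photograph scaled to [0, 1].
start_image = tf.random.uniform((1, 64, 64, 3))

image = tf.identity(start_image)
losses = []
for _ in range(20):
    image, loss = dream_step(feature_model, image, filter_index=3)
    losses.append(float(loss))
```

Running only a limited number of small steps keeps the result recognizably close to the starting image, while the chosen filter's preferred patterns begin to appear.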

We can do the same thing with classes, by maximizing activations in the final layer of the network:

Stylizations of an image of Astronaut Eileen Collins by modifying the activations of neurons at the class layer of the VGG16 CNN architecture.

We can also visualize those classes during each step of gradient ascent.

Manipulating an image of Astronaut Eileen Collins to maximize class activations in the final layer of VGG16.

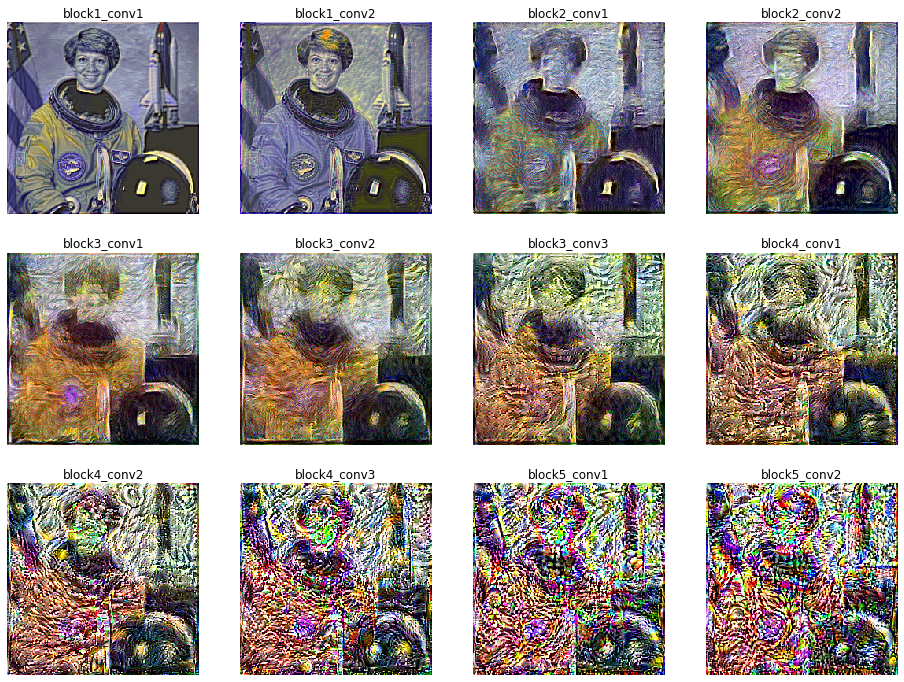
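To watch a class emerge step by step, one option is to keep a copy of the image after every gradient-ascent update. The sketch below assumes the class score is a pre-softmax output (the post does not say whether logits or probabilities were used). `ascend_class`, `class_index`, and the small untrained `classifier` are hypothetical stand-ins for the full VGG16 classifier.

```python
import tensorflow as tf

def ascend_class(classifier, image, class_index, steps=10, step_size=0.5):
    """Gradient ascent on one class score, saving the image after every step."""
    frames = [image.numpy()]
    for _ in range(steps):
        with tf.GradientTape() as tape:
            tape.watch(image)
            score = classifier(image)[0, class_index]
        grads = tape.gradient(score, image)
        # Assumption: unit-norm gradient so each step moves the image by step_size.
        grads = grads / (tf.norm(grads) + 1e-8)
        image = image + step_size * grads
        frames.append(image.numpy())
    return image, frames

# Hypothetical stand-in classifier with 10 output scores (no softmax).
tf.random.set_seed(0)
classifier = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(8, 3, activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(10),
])

# Placeholder for a real photograph scaled to [0, 1].
start_image = tf.random.uniform((1, 32, 32, 3))
score_before = float(classifier(start_image)[0, 3])
final_image, frames = ascend_class(classifier, start_image, class_index=3)
score_after = float(classifier(final_image)[0, 3])
```

The `frames` list can then be plotted as a grid or written out as a video, one frame per step.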

Style transfer

We can also manipulate the style of an image to match a second image, a technique called style transfer. It has been studied extensively. The approach here is simple and could be extended in many ways (for example, by performing style transfer over multiple layers simultaneously).

Here, we transfer the activation profile of an image of Astronaut Eileen Collins to an image of the painting The Starry Night by Van Gogh.

Style transfer between an image of Astronaut Eileen Collins and the painting The Starry Night by Van Gogh, using activations of the VGG16 CNN architecture.
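The post does not spell out the loss used to match activation profiles, so the sketch below swaps in a common formulation as an assumption: summarize one layer's activations with a Gram matrix (channel-by-channel correlations) and run gradient descent on the image until its Gram matrix approaches that of the other image. `gram_matrix`, `style_step`, `source_image`, `canvas_image`, and the untrained `feature_model` are hypothetical names and stand-ins. Which image supplies the statistics and which is modified is simply a matter of which one is passed where.

```python
import tensorflow as tf

def gram_matrix(features):
    """Channel correlations of a (1, H, W, C) feature map, averaged over positions."""
    _, h, w, c = features.shape
    flat = tf.reshape(features, (h * w, c))
    return tf.matmul(flat, flat, transpose_a=True) / tf.cast(h * w, tf.float32)

def style_step(feature_model, image, target_gram, step_size=0.02):
    """One gradient-descent step that pulls the image's Gram matrix toward the target."""
    with tf.GradientTape() as tape:
        tape.watch(image)
        gram = gram_matrix(feature_model(image))
        loss = tf.reduce_mean(tf.square(gram - target_gram))
    grads = tape.gradient(loss, image)
    grads = grads / (tf.math.reduce_std(grads) + 1e-8)  # assumption: normalized step
    return image - step_size * grads, loss

# Hypothetical single-layer stand-in for one VGG16 layer.
tf.random.set_seed(0)
feature_model = tf.keras.Sequential([
    tf.keras.Input(shape=(48, 48, 3)),
    tf.keras.layers.Conv2D(8, 3, activation="relu", padding="same"),
])

# Placeholders for two real images; they differ in brightness so the statistics differ.
source_image = 0.2 * tf.random.uniform((1, 48, 48, 3))  # supplies the target statistics
canvas_image = tf.random.uniform((1, 48, 48, 3))        # image being modified

target_gram = gram_matrix(feature_model(source_image))
image = tf.identity(canvas_image)
losses = []
for _ in range(20):
    image, loss = style_step(feature_model, image, target_gram)
    losses.append(float(loss))
```

Extending this to several layers, as suggested above, would amount to summing one such loss per layer before taking the gradient.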

All the code needed to reproduce these images and videos is available in self-contained Jupyter notebooks.

That's it! This is the final section. Thanks for reading.