Visualizing features, receptive fields, and classes in neural networks from "scratch" with Tensorflow 2. Part 3: Reconstructing images from layer activations

Posted on Tue 19 May 2020 in Neural networks

Summary

In the last section I talked about visualizing neural network features by synthesizing images that maximally activate artificial neurons, and by looking at activations in response to specific images, to try to see what those neurons are doing. This time, we're going to see how much information is contained in the neurons of different layers of the network by trying to reconstruct images from the original layer activations. All the figures here are generated from a single self-contained Jupyter notebook.

There are a few sections to this series:

Synthesizing reconstructed images based upon their activations in different layers of a neural network

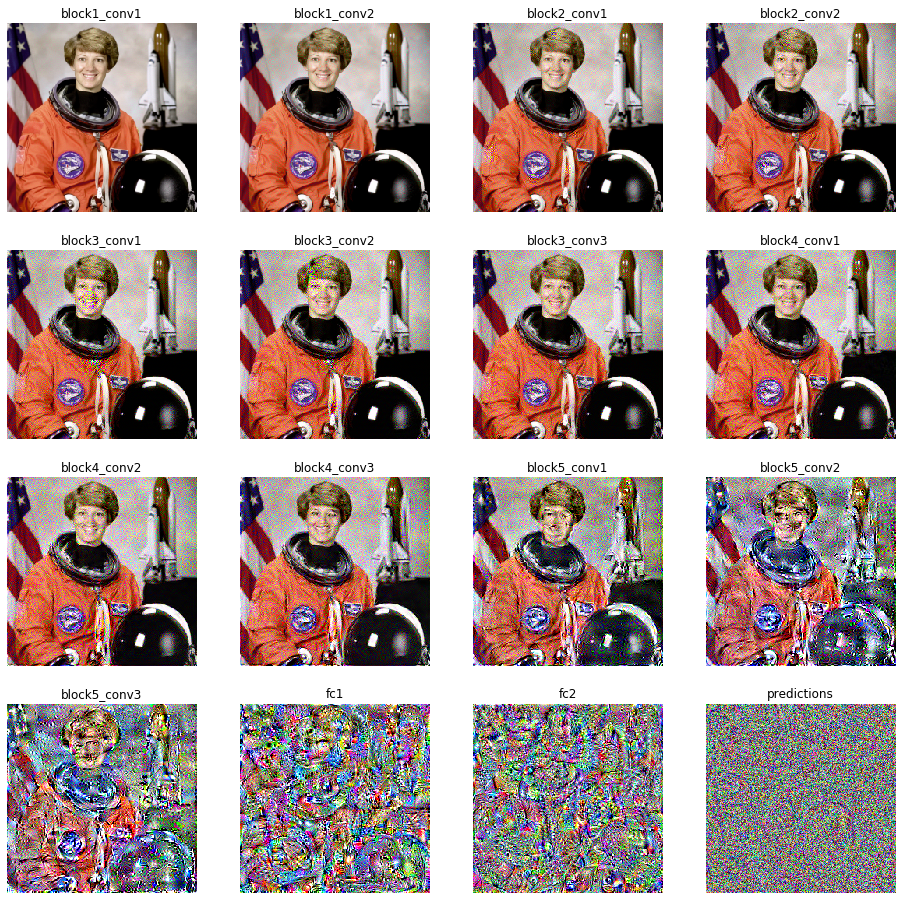

The final thing I wanted to try was reconstructing the image from its activations in different layers of the neural network. To do this, I start with white noise, then perform the same optimization steps as in the maximum activation sections, but instead of maximally activating a neuron, I try to make the neurons' activations across the whole layer identical to the activations produced by the original image. In the first few layers, you get perfect reconstruction. Further into the network, after spatial information is lost in the max-pooling layers, there is some image distortion. Finally, in the last layers, it appears as if no information is present.
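The procedure above can be sketched roughly as follows. This is a minimal illustration, not the notebook's actual code: the function name `reconstruct_from_layer`, the mean-squared-error loss, the RMS-normalized gradient step, and the clipping to [0, 1] are all assumptions made for the example.

```python
import tensorflow as tf


def reconstruct_from_layer(feature_model, target_image, steps=200,
                           learning_rate=0.05, seed=0):
    """Optimize a white-noise image until its layer activations match
    those of target_image (hypothetical helper, assumed details)."""
    target_image = tf.convert_to_tensor(target_image, dtype=tf.float32)
    # Activations of the original image at the chosen layer.
    target_activation = feature_model(target_image, training=False)

    # Start from white noise with the same shape as the original image.
    image = tf.Variable(tf.random.uniform(target_image.shape, seed=seed))

    losses = []
    for _ in range(steps):
        with tf.GradientTape() as tape:
            activation = feature_model(image, training=False)
            # Assumed loss: mean squared difference between activations.
            loss = tf.reduce_mean(tf.square(activation - target_activation))
        grad = tape.gradient(loss, image)
        # Assumed update: gradient descent with an RMS-normalized gradient.
        grad = grad / (tf.sqrt(tf.reduce_mean(tf.square(grad))) + 1e-8)
        image.assign_sub(learning_rate * grad)
        # Assumes the model takes inputs scaled to [0, 1].
        image.assign(tf.clip_by_value(image, 0.0, 1.0))
        losses.append(float(loss))

    return image.numpy(), losses
```

For VGG16, `feature_model` could be built with something like `tf.keras.Model(vgg.input, vgg.get_layer("block1_conv2").output)`, where `vgg = tf.keras.applications.VGG16(weights="imagenet", include_top=False)`. The clipping range and any input preprocessing would then need to match what that model expects.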

Reconstructions of an image of Astronaut Eileen Collins based only upon the activations of neurons at each layer of the VGG16 neural network. Reconstructed images are produced by modifying the image to produce the same layer-activations as in the original image.

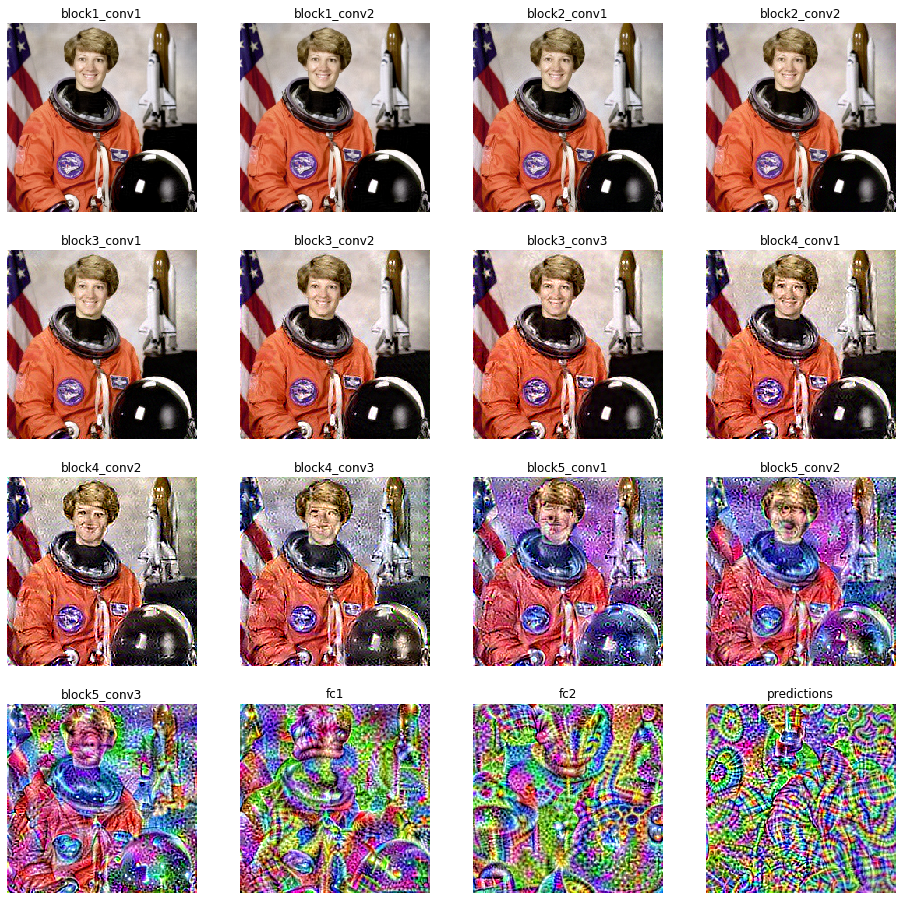

I also played around with using some of the techniques from the class visualization section to smooth the image and look for higher-level features. This helped in finding at least a little more structure in the later layers.
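The exact smoothing and normalization steps are not spelled out here, so the following is only one plausible reading: blur the image slightly and rescale the gradient to unit RMS on each iteration. The helper names `gaussian_kernel`, `smooth`, and `normalize_gradient`, the Gaussian blur itself, and the default kernel size and sigma are all assumptions for illustration.

```python
import tensorflow as tf


def gaussian_kernel(size=5, sigma=1.0, channels=3):
    """Build a depthwise Gaussian kernel of shape (size, size, channels, 1)."""
    ax = tf.range(size, dtype=tf.float32) - (size - 1) / 2.0
    g = tf.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    g = g / tf.reduce_sum(g)
    kernel_2d = tf.tensordot(g, g, axes=0)  # outer product, sums to 1
    return tf.tile(kernel_2d[:, :, tf.newaxis, tf.newaxis],
                   [1, 1, channels, 1])


def smooth(image, size=5, sigma=1.0):
    """Blur each channel of a (batch, height, width, channels) image."""
    kernel = gaussian_kernel(size, sigma, image.shape[-1])
    return tf.nn.depthwise_conv2d(image, kernel,
                                  strides=[1, 1, 1, 1], padding="SAME")


def normalize_gradient(grad, eps=1e-8):
    """Rescale a gradient so its root-mean-square value is about 1."""
    return grad / (tf.sqrt(tf.reduce_mean(tf.square(grad))) + eps)
```

In an optimization loop, `normalize_gradient` would be applied to the gradient before each update and `smooth` to the image after it. Blurring suppresses high-frequency noise, which is one reason a little more structure might show up in the later layers.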

Reconstructions of an image of Astronaut Eileen Collins based only upon the activations of neurons at each layer of the VGG16 neural network. Reconstructed images are produced by modifying the image to produce the same layer-activations as in the original image. These reconstructions use smoothing, and some normalization with each iteration.

All the code needed to reproduce these images and videos is available in self-contained Jupyter notebooks.

In the next section, we'll use the methods from the first three sections to transfer style to images using methods similar to DeepDream.